OneKey US Code Import from DT

Prerequisite

The following are the prerequisite conditions for OneKey US Code Import from DT:

| Area | Prerequisite Details |
|---|---|
| Reltio Tenant Configuration | To configure the Reltio tenant details, see Configure Connection. |
| SFTP Connections | Make sure that the CT SFTP connections are configured in the DT collection. |
| Availability of Files | Make sure that the customer tenant SFTP location contains the files to load: ~S3/CT_OK_US_CODES/input/OK_US_CODE*.flat |
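As a quick pre-check for the file availability prerequisite, the following sketch filters a list of file names against the OK_US_CODE*.flat pattern. It assumes the folder listing has already been obtained from the SFTP/S3 location by other means; the function name and the sample file names are hypothetical.

```python
from fnmatch import fnmatchcase

# Pattern taken from the prerequisite table; everything else is illustrative.
CODE_FILE_PATTERN = "OK_US_CODE*.flat"


def find_code_files(file_names):
    """Return the names that match the OK US code file pattern (case-sensitive)."""
    return sorted(name for name in file_names if fnmatchcase(name, CODE_FILE_PATTERN))


# Hypothetical listing of the input folder.
listing = [
    "OK_US_CODE_20240101.flat",
    "readme.txt",
    "OK_US_CODE_20240102.flat",
    "ok_us_code.flat",
]
matches = find_code_files(listing)
print(matches)
```

An empty result would suggest that the DT pipeline has not yet delivered any files to the input folder.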

Set Up SFTP User and Communicate to Product Team

Configure the data tenant pipeline with the customer tenant S3 path details so that it can copy the codes for the subscribed country list.

- Create the folder DT_OK_US_CODES in the root folder S3/CT_OK_US_CODES/input/.

- Create the Customer Tenant S3 Connector collection and share it with the data tenant pipeline so that it can copy the OK code files to the CT S3 path.

OneKey US Code Load Overview

Customers who are subscribed to Reltio DataTenant can stream entities and relations using the DTSS setup, but RDM codes are not supported by this stream. The approach below is intended to load DT RDM codes into CT RDM tenants using the IDP platform.

Import Pipeline Template

To import the pipeline template:

- Connect to the IDP default S3 bucket and go to the folder <bucket_name>/templates/product.

- Download the MDM_Load_US_Code_CT_DTSS_<version>.json template file to a local Windows folder.

Note:

If there are multiple files prefixed MDM_Load_US_Code_CT_DTSS_* with different versions, download the latest version.

- In the downloaded template, replace the <DATABASE_NAME> and <CONNECTOR_ID> placeholders with the database name and the connector ID.

- Log in to the IDP platform with valid credentials.

- Go to Data Pipeline, click Task Group from Template, and import the template using the updated template file.

Note:

After a successful import, the process creates the pipeline task group. Use this task group for execution.
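The placeholder replacement step can be scripted instead of edited by hand. The sketch below is a minimal example, assuming the placeholders appear as literal text inside the template JSON; the sample template content and the values MY_DB and 1234 are hypothetical and do not reflect the real template structure.

```python
import json

# Placeholder names as they appear in the template file.
PLACEHOLDERS = ("<DATABASE_NAME>", "<CONNECTOR_ID>")


def fill_template(template_text, database_name, connector_id):
    """Replace both placeholders and report how often each one was found."""
    values = dict(zip(PLACEHOLDERS, (database_name, connector_id)))
    counts = {}
    for placeholder, value in values.items():
        counts[placeholder] = template_text.count(placeholder)
        template_text = template_text.replace(placeholder, value)
    # Raises ValueError if the result is no longer valid JSON.
    json.loads(template_text)
    return template_text, counts


# Hypothetical stand-in for the downloaded template content.
sample = '{"database": "<DATABASE_NAME>", "folder": "DT_OK_US_CODES/<CONNECTOR_ID>/"}'
filled, counts = fill_template(sample, "MY_DB", "1234")
print(filled)
print(counts)
```

A count of zero for either placeholder is a hint that the wrong file or an already edited copy was used.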

Pipeline Steps

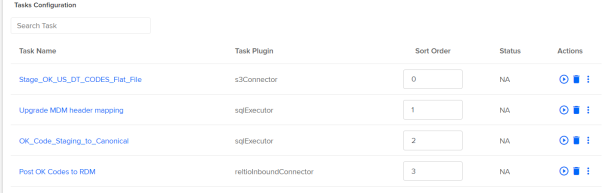

Once the DT pipeline pushes the data into the CT SFTP path, users need to process those files using the pipeline below. This pipeline has four steps: the first two move the US codes, and the rest are for Global Codes.

- The SqlConnector plugin (Snowflake stage) reads the data from the OK Code table (US) and writes it to the DT S3 path ~DT:S3/DT_OK_US_CODES/<CONNECTOR_ID>/OK_US_CODE_TIMESTAMP.flat for US.

- Based on the OK-RELTIO header mapping, the data is populated in the XREF table.

- Based on the last run date that is maintained in the PROCESS_LOG table, the OK codes are converted into the generic code format.

- The OK codes are posted to the Reltio RDM tenant.

Configuration

The following parameters need to be updated:

- Connector_ID for US: Identifies the connector-specific folder used for the exported code files.

- COD_EXL: Excludes specific codes. Do not update the baseline header unless there is a business need.

- IN_JSON: Cross-reference from the OK header to the MDM header.

- SCD_TYPE: TYPE_1

- REFRESH_TYPE: FULL REFRESH

- Task_SystemName: Used with the MDM_PROCESS_CONTROL_LOG table to maintain the last run date.

- LND_SCHEMA: ODP_CORE_LANDING

- STG_SCHEMA: ODP_CORE_STAGING

- CTRY_DPND: Y
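When reviewing a configured task group, the parameters with fixed values can be checked mechanically. The sketch below compares a parameter set against the values listed above; the function name and the sample parameter set are hypothetical, and how the parameters are exported from the IDP platform is not covered here.

```python
# Fixed values exactly as listed in the Configuration section.
DOCUMENTED_VALUES = {
    "SCD_TYPE": "TYPE_1",
    "REFRESH_TYPE": "FULL REFRESH",
    "LND_SCHEMA": "ODP_CORE_LANDING",
    "STG_SCHEMA": "ODP_CORE_STAGING",
    "CTRY_DPND": "Y",
}


def find_mismatches(params):
    """Return {name: (expected, actual)} for parameters that differ from the documented values."""
    return {
        name: (expected, params.get(name))
        for name, expected in DOCUMENTED_VALUES.items()
        if params.get(name) != expected
    }


# Hypothetical parameter set copied out of a task group for review.
params = {
    "SCD_TYPE": "TYPE_1",
    "REFRESH_TYPE": "FULL REFRESH",
    "LND_SCHEMA": "ODP_CORE_LANDING",
    "STG_SCHEMA": "ODP_CORE_STAGE",  # deliberate typo for the example
    "CTRY_DPND": "Y",
}
mismatches = find_mismatches(params)
print(mismatches)
```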

Special Instructions for Code Migration for Those Clients Who Have Migrated from MDM1.0 to MDM2.0

For clients who currently use the MDM1.0 Informatica workflow to load OK data and are migrating to the MDM2.0 IDP OK pipeline, follow the steps below to maintain the same WS canonical codes in the RDM tenant.

-

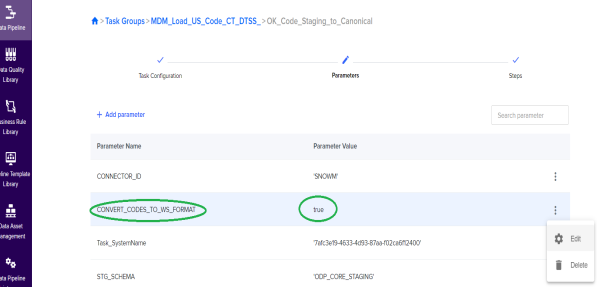

After importing the MDM_Load_US_Code_CT_DTSS pipeline, go to Code - Stage To Canonical task and chose parameters section to modify the parameter CONVERT_CODES_TO_WS_FORMAT as true.

Note:

By default, CONVERT_CODES_TO_WS_FORMAT can return false.

This code conversion can pick only the codes that can fall under the current run(delta). In order to convert all the codes available in staging, update the pipeline code's LAST_RUN_DATE in ODP_CORE_LOG.MDM_PROCESS_CONTROL_LOG table with 1900-01-01.
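The effect of resetting LAST_RUN_DATE can be illustrated with a small delta filter. This is only a sketch of the idea, not the pipeline's actual logic: the field name modified, the strict "later than" comparison, and the sample records are all assumptions.

```python
from datetime import date


def select_delta(codes, last_run_date):
    """Keep only the codes modified after the last run date (assumed rule)."""
    return [code for code in codes if code["modified"] > last_run_date]


# Hypothetical staged codes.
staged = [
    {"code": "A", "modified": date(2021, 5, 1)},
    {"code": "B", "modified": date(2024, 3, 15)},
]

# A recent last run date selects only the newer code.
delta = select_delta(staged, date(2024, 1, 1))

# Resetting the last run date to 1900-01-01 selects every staged code.
full = select_delta(staged, date(1900, 1, 1))

print([c["code"] for c in delta])
print([c["code"] for c in full])
```

Under these assumptions, a last run date far in the past makes every staged code part of the delta, so the next run converts all of them.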

Troubleshooting

- If there is an S3 connection error, fix it in Entity Collections.

- If the load status in the C_RDM table is ERROR, refer to load_details and reprocess the codes.

- The ODP_CORE_STAGING.Generic_Invalid_codes table holds invalid codes. If it contains entries, fix those codes.